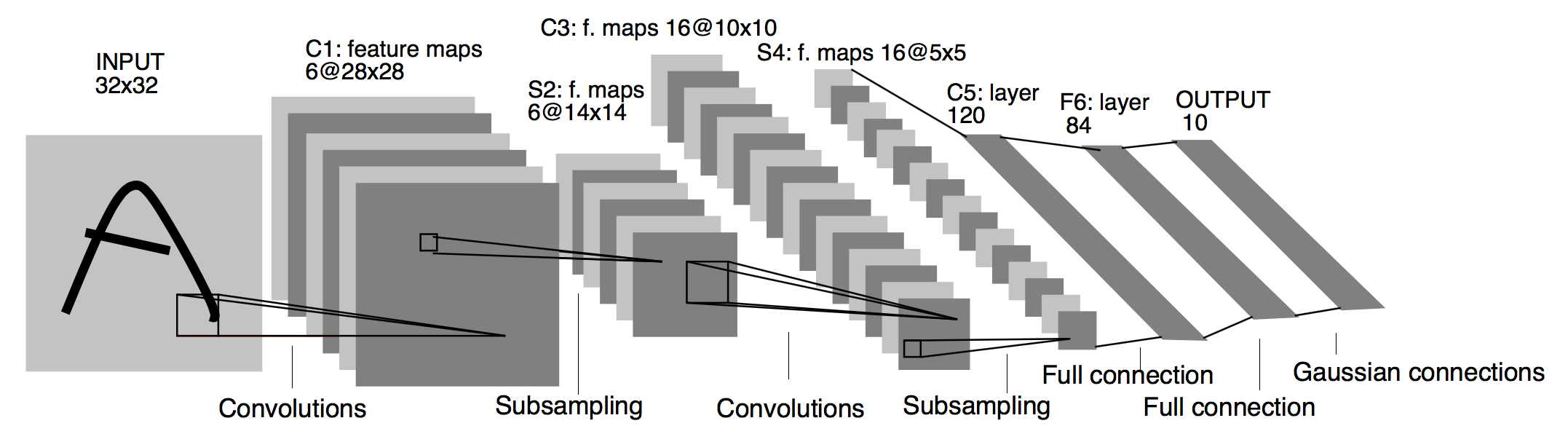

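MNIST (LeNet)

- 5-layer structure: Conv-Pool-Conv-Pool-Conv-FC-FC(SM), with softmax (SM) on the final layer

- Input: 32x32 handwritten digit images (MNIST data)

- Pooling: weight × (sum of a 2x2 block) + bias term

- Uses the sigmoid activation function

- Performance: 0.95% error rate (99.05% accuracy)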
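The pooling rule in the list above (a weight times the sum of each 2x2 block, plus a bias, followed by a sigmoid) can be sketched in NumPy. This is only a minimal illustration: the function names `subsample_2x2` and `sigmoid` are hypothetical, one scalar weight and bias per feature map is assumed, and the feature map is assumed to have even height and width.

```python
import numpy as np

def sigmoid(z):
    """Logistic sigmoid, applied element-wise."""
    return 1.0 / (1.0 + np.exp(-z))

def subsample_2x2(feature_map, weight, bias):
    """weight * (sum of each non-overlapping 2x2 block) + bias."""
    h, w = feature_map.shape
    # Axes 1 and 3 index the position inside each 2x2 block.
    blocks = feature_map.reshape(h // 2, 2, w // 2, 2)
    block_sums = blocks.sum(axis=(1, 3))
    return weight * block_sums + bias

fmap = np.arange(16, dtype=float).reshape(4, 4)

# With weight=0.25 and bias=0 the rule reduces to plain average pooling:
# the values are [[2.5, 4.5], [10.5, 12.5]].
pooled = subsample_2x2(fmap, weight=0.25, bias=0.0)
activated = sigmoid(pooled)
print(pooled.shape)  # (2, 2)
```

Unlike max pooling, the weight and bias here would be learned during training, so the layer has trainable parameters.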

Library Import

    from tensorflow.keras import Model
    from tensorflow.keras.layers import Conv2D, MaxPool2D, Flatten, Dense
    from tensorflow.keras.callbacks import EarlyStopping, TensorBoard
    from tensorflow.keras.datasets import mnist
    import numpy as np
    import matplotlib.pyplot as plt

    # On Matplotlib 3.6 and later this style is named 'seaborn-v0_8'.
    plt.style.use('seaborn')

Data Load

    # Data Load
    (X_train, y_train), (X_test, y_test) = mnist.load_data()

    print(X_train.shape)
    print(y_train.shape)
    print(X_test.shape)
    print(y_test.shape)

    # The images fed to the model are grayscale (channel=1), not color (channel=3).
    # The input tensor needs 4 dimensions: (batch, height, width, channels).
    X_train, X_test = X_train[..., np.newaxis], X_test[..., np.newaxis]

    print(X_train.shape)
    print(y_train.shape)
    print(X_test.shape)
    print(y_test.shape)

    Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/mnist.npz
    11490434/11490434 [==============================] - 2s 0us/step
    (60000, 28, 28)
    (60000,)
    (10000, 28, 28)
    (10000,)
    (60000, 28, 28, 1)
    (60000,)
    (10000, 28, 28, 1)
    (10000,)
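The shape change from `(60000, 28, 28)` to `(60000, 28, 28, 1)` comes from indexing with `np.newaxis`. A small stand-alone sketch, using a dummy array of five images as a stand-in for the real data:

```python
import numpy as np

# Stand-in for a tiny batch of grayscale images: (batch, height, width).
images = np.zeros((5, 28, 28), dtype=np.uint8)

# Append a channel axis of length 1: (batch, height, width, channels).
images_4d = images[..., np.newaxis]
print(images_4d.shape)  # (5, 28, 28, 1)

# np.expand_dims with axis=-1 is an equivalent spelling.
same = np.expand_dims(images, axis=-1)
print(same.shape)  # (5, 28, 28, 1)
```

No pixel data is copied or changed; only the array's shape gains a trailing axis so that `Conv2D` sees an explicit channel dimension.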

    # First training image
    plt.imshow(X_train[0, :, :, 0])
    plt.show()

Image Preprocessing

Normalization: rescale pixel values from the 0-255 range to the 0-1 range.

    X_train, X_test = X_train / 255.0, X_test / 255.0
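To see what the division does to the data type and value range, here is a minimal sketch on a hypothetical three-pixel array rather than the real images:

```python
import numpy as np

# Hypothetical pixel values covering the darkest, a middle, and the brightest level.
pixels = np.array([[0, 128, 255]], dtype=np.uint8)

# Dividing by a float promotes the uint8 array to floating point.
scaled = pixels / 255.0
print(scaled.dtype)                # float64
print(scaled.min(), scaled.max())  # 0.0 1.0
```

The result is `float64`, which takes eight times the memory of the original `uint8` data. Casting with `.astype(np.float32)` is a common option when memory matters, though the code above does not do so.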

Model

    num_class = 10   # 10 classes, the digits 0 through 9
    epochs = 30
    batch_size = 32
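These settings determine how many weight updates happen per epoch. A quick check, taking the 60000 training images reported by `X_train.shape` as given:

```python
import math

num_train = 60000   # training images, from X_train.shape
batch_size = 32
epochs = 30

# One optimizer step per batch; the last batch may be smaller, hence ceil.
steps_per_epoch = math.ceil(num_train / batch_size)
print(steps_per_epoch)           # 1875
print(steps_per_epoch * epochs)  # 56250, an upper bound if training is not stopped early
```

The value 1875 is the step count shown as `1875/1875` in the training log.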

    class LeNet5(Model):
        # Model definition
        # Note: this version uses ReLU and max pooling, not the sigmoid and
        # weighted-sum pooling listed in the summary above.
        def __init__(self, num_class):
            super().__init__()
            # padding='same' keeps the 28x28 MNIST input at 28x28.
            self.conv1 = Conv2D(filters=6, kernel_size=(5, 5), padding='same', activation='relu')
            self.conv2 = Conv2D(filters=16, kernel_size=(5, 5), activation='relu')
            # Pooling has no weights, so one layer instance serves both stages.
            self.max_pool = MaxPool2D(pool_size=(2, 2))
            self.flatten = Flatten()
            self.dense1 = Dense(units=120, activation='relu')
            self.dense2 = Dense(units=84, activation='relu')
            self.dense3 = Dense(units=num_class, activation='softmax')

        # Forward pass
        def call(self, input_data):
            # Input data -> Conv2D -> MaxPool2D
            x = self.max_pool(self.conv1(input_data))
            # Conv2D -> MaxPool2D
            x = self.max_pool(self.conv2(x))
            x = self.flatten(x)
            # Flatten -> Dense1 -> Dense2 -> Dense3
            x = self.dense3(self.dense2(self.dense1(x)))
            return x
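The feature-map sizes and parameter counts of this model can be worked out by hand. The sketch below does that arithmetic in plain Python, assuming stride-1 convolutions and 2x2 pooling as configured in the class; the helper names are hypothetical.

```python
def conv_out(size, kernel, padding):
    """Spatial output size of a stride-1 convolution."""
    return size if padding == "same" else size - kernel + 1

def conv_params(kernel, in_ch, out_ch):
    """Weights plus one bias per output channel."""
    return kernel * kernel * in_ch * out_ch + out_ch

def dense_params(in_units, out_units):
    """Weights plus one bias per output unit."""
    return in_units * out_units + out_units

size = 28
size = conv_out(size, 5, "same")    # conv1    -> 28x28x6
size = size // 2                    # max_pool -> 14x14x6
size = conv_out(size, 5, "valid")   # conv2    -> 10x10x16
size = size // 2                    # max_pool -> 5x5x16
flat = size * size * 16             # flatten  -> 400

total = (conv_params(5, 1, 6) + conv_params(5, 6, 16)
         + dense_params(flat, 120) + dense_params(120, 84)
         + dense_params(84, 10))
print(flat, total)  # 400 61706
```

Most of the parameters (48120 of 61706) sit in the first dense layer, which connects the 400 flattened features to 120 units.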

    model = LeNet5(num_class)

    # Model Compile
    model.compile(optimizer='sgd',
                  loss='sparse_categorical_crossentropy',
                  metrics=['accuracy'])
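The `sparse_categorical_crossentropy` loss fits here because `y_train` holds integer labels (shape `(60000,)`) rather than one-hot vectors. A minimal per-sample sketch of the idea in plain Python follows; `sparse_cce` is a hypothetical helper, not the Keras implementation, and the probabilities are made up for illustration.

```python
import math

def sparse_cce(label, probs):
    """Loss for one sample: negative log of the probability given to the true class."""
    return -math.log(probs[label])

# Hypothetical softmax output over 4 classes; the true class is index 2.
probs = [0.05, 0.05, 0.80, 0.10]
loss_sparse = sparse_cce(2, probs)

# The same loss computed from a one-hot target, as categorical cross-entropy would.
one_hot = [0, 0, 1, 0]
loss_one_hot = -sum(t * math.log(p) for t, p in zip(one_hot, probs))

print(round(loss_sparse, 4))  # 0.2231
```

Both forms give the same number; the sparse variant just skips the one-hot encoding step.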

    # Callbacks
    callbacks = [EarlyStopping(patience=3, monitor='val_loss'),
                 TensorBoard(log_dir='./logs', histogram_freq=1)]

    # Model Fit
    # Note: the test set doubles as validation data here, so early stopping is tuned on it.
    model.fit(X_train, y_train,
              batch_size=batch_size,
              epochs=epochs,
              validation_data=(X_test, y_test),
              callbacks=callbacks)

    Epoch 1/30
    1875/1875 [==============================] - 19s 5ms/step - loss: 0.4899 - accuracy: 0.8537 - val_loss: 0.1340 - val_accuracy: 0.9595
    Epoch 2/30
    1875/1875 [==============================] - 9s 5ms/step - loss: 0.1245 - accuracy: 0.9615 - val_loss: 0.1257 - val_accuracy: 0.9611
    Epoch 3/30
    1875/1875 [==============================] - 8s 5ms/step - loss: 0.0914 - accuracy: 0.9720 - val_loss: 0.0632 - val_accuracy: 0.9805
    Epoch 4/30
    1875/1875 [==============================] - 9s 5ms/step - loss: 0.0737 - accuracy: 0.9776 - val_loss: 0.0630 - val_accuracy: 0.9795
    Epoch 5/30
    1875/1875 [==============================] - 8s 5ms/step - loss: 0.0634 - accuracy: 0.9804 - val_loss: 0.0517 - val_accuracy: 0.9825
    Epoch 6/30
    1875/1875 [==============================] - 8s 4ms/step - loss: 0.0562 - accuracy: 0.9824 - val_loss: 0.0435 - val_accuracy: 0.9854
    Epoch 7/30
    1875/1875 [==============================] - 8s 4ms/step - loss: 0.0502 - accuracy: 0.9843 - val_loss: 0.0575 - val_accuracy: 0.9813
    Epoch 8/30
    1875/1875 [==============================] - 9s 5ms/step - loss: 0.0455 - accuracy: 0.9855 - val_loss: 0.0500 - val_accuracy: 0.9809
    Epoch 9/30
    1875/1875 [==============================] - 8s 4ms/step - loss: 0.0407 - accuracy: 0.9870 - val_loss: 0.0378 - val_accuracy: 0.9872
    Epoch 10/30
    1875/1875 [==============================] - 8s 4ms/step - loss: 0.0374 - accuracy: 0.9882 - val_loss: 0.0362 - val_accuracy: 0.9885
    Epoch 11/30
    1875/1875 [==============================] - 8s 4ms/step - loss: 0.0343 - accuracy: 0.9892 - val_loss: 0.0405 - val_accuracy: 0.9871
    Epoch 12/30
    1875/1875 [==============================] - 8s 4ms/step - loss: 0.0314 - accuracy: 0.9899 - val_loss: 0.0391 - val_accuracy: 0.9870
    Epoch 13/30
    1875/1875 [==============================] - 9s 5ms/step - loss: 0.0290 - accuracy: 0.9908 - val_loss: 0.0397 - val_accuracy: 0.9871
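Training ends at epoch 13 of 30 because of the `EarlyStopping(patience=3, monitor='val_loss')` callback. The sketch below replays the `val_loss` values from the log through a simplified version of the patience rule. The function `early_stop_epoch` is hypothetical and ignores options of the real callback such as `min_delta` and `restore_best_weights`.

```python
# val_loss per epoch, copied from the training log.
val_losses = [0.1340, 0.1257, 0.0632, 0.0630, 0.0517, 0.0435, 0.0575,
              0.0500, 0.0378, 0.0362, 0.0405, 0.0391, 0.0397]

def early_stop_epoch(losses, patience):
    """Return (epoch at which training stops, best loss seen), epochs counted from 1."""
    best = float("inf")
    wait = 0
    for epoch, loss in enumerate(losses, start=1):
        if loss < best:
            best, wait = loss, 0   # improvement: reset the counter
        else:
            wait += 1              # no improvement this epoch
            if wait >= patience:
                return epoch, best
    return None, best              # patience never ran out

print(early_stop_epoch(val_losses, patience=3))  # (13, 0.0362)
```

The best `val_loss` (0.0362) occurs at epoch 10, and epochs 11 to 13 fail to beat it, so training stops. Since `restore_best_weights` defaults to `False`, the model keeps the epoch 13 weights rather than those from epoch 10.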

    # In a notebook, run `%load_ext tensorboard` first if the extension is not loaded yet.
    %tensorboard --logdir logs